fAIry tales

fAIry tales is a project where fairy tales are automatically generated based on algorithmically detected objects in mundane images. Using images from cocodataset.org, I detect everyday objects with the YOLOv3 object detection algorithm. From these objects, the title and the beginning of a story are generated and used as prompts for the text generation model XLNet.

The project is an exploration of computer-written fiction and can be seen as a continuation of two previous projects of mine: Poems About Things, which constructs quirky sentences about the objects it sees through the user's camera feed, and Booksby.ai, an online bookstore that sells science fiction novels generated by an artificial intelligence.

As with Poems About Things, the texts produced are unique to the pictures you feed into the system, but instead of single-line poems, these texts are longer and form more coherent stories. Where Booksby.ai focused on creating science fiction, fAIry tales aims to generate fairy tales. It succeeds in producing more coherent plot lines and sentences than Booksby.ai, although surprising and absurd narratives often arise from the attempt to create adventures about boring objects.

How does it work?

Step 1: Detect objects in images

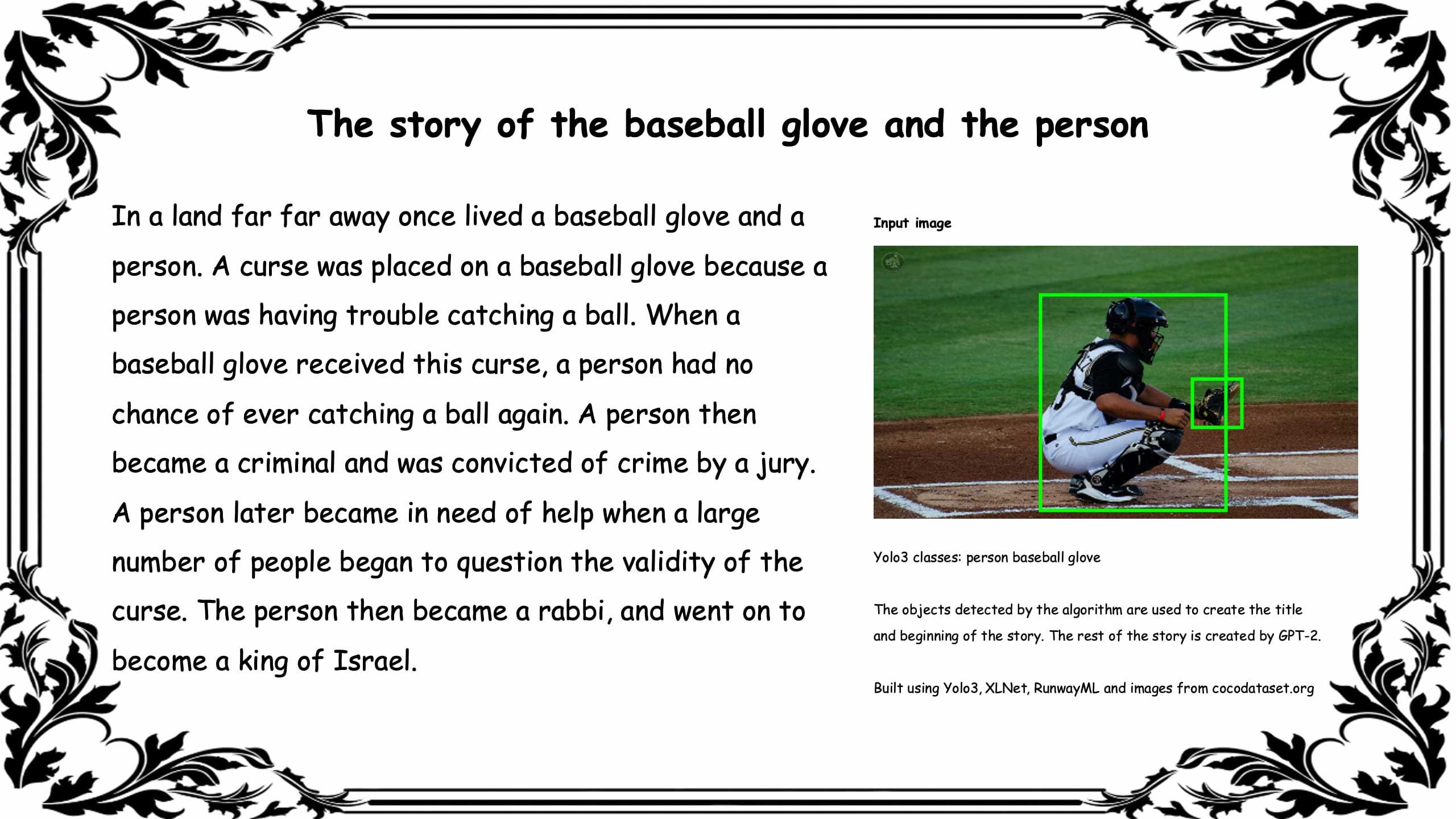

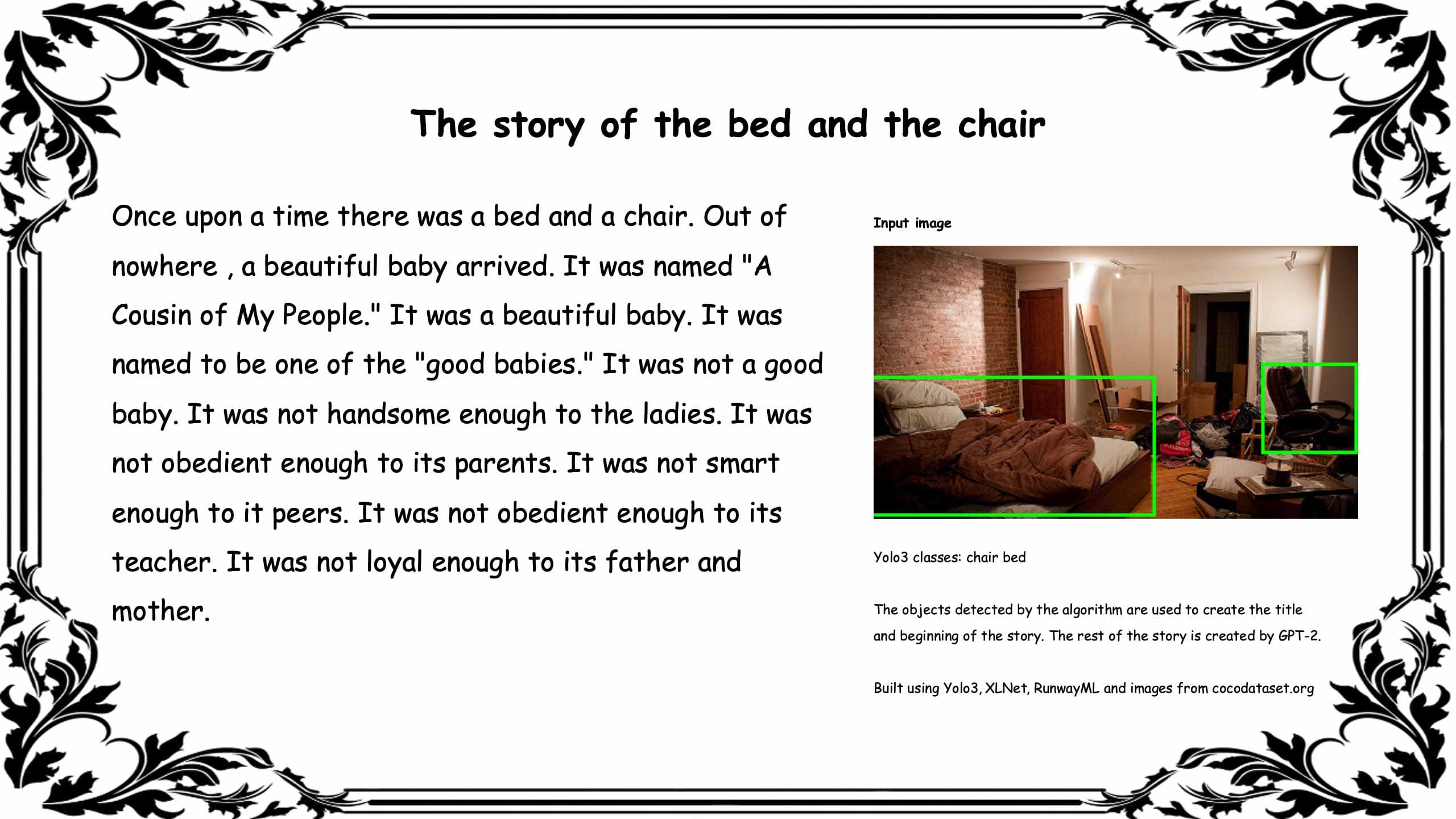

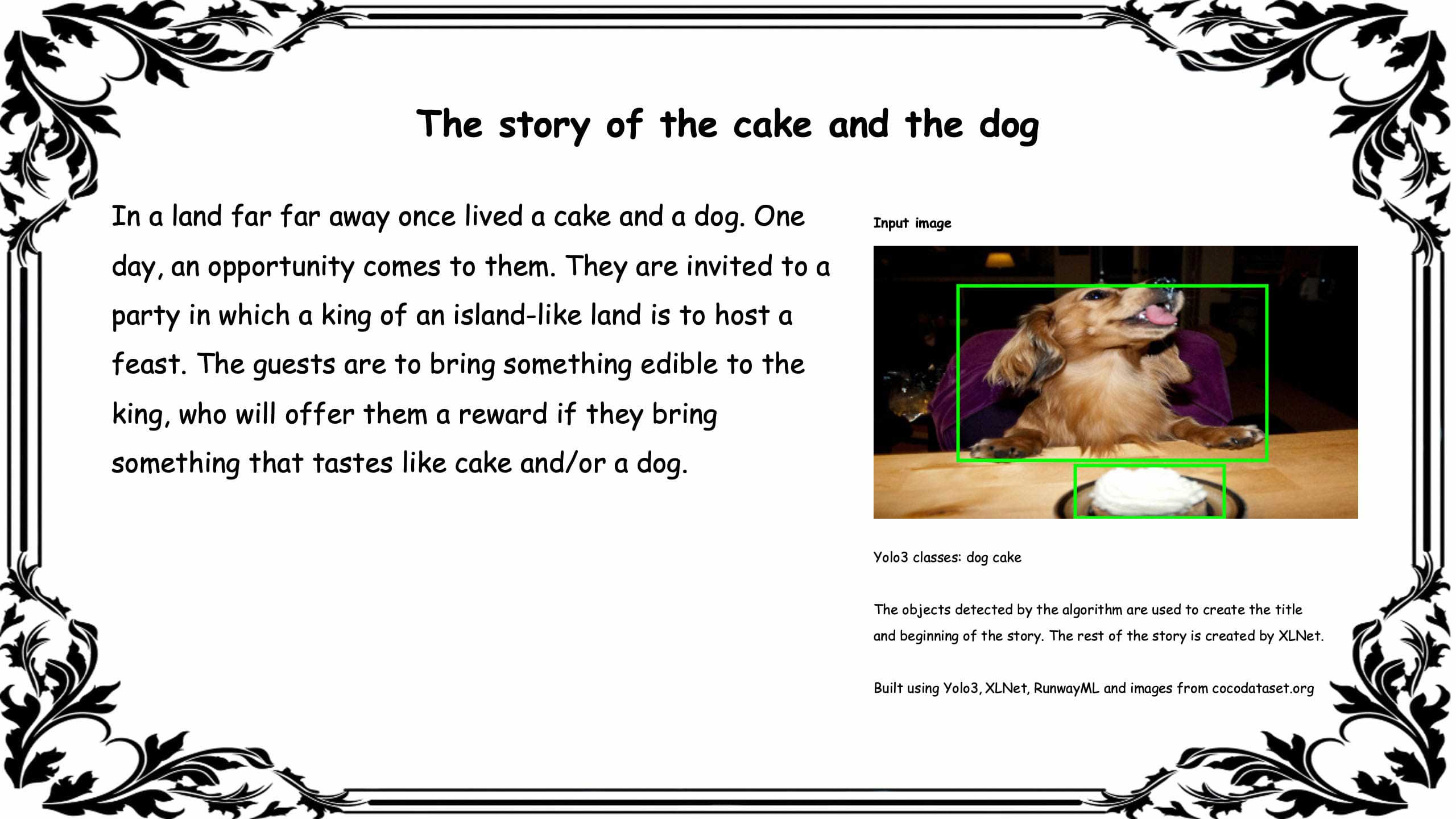

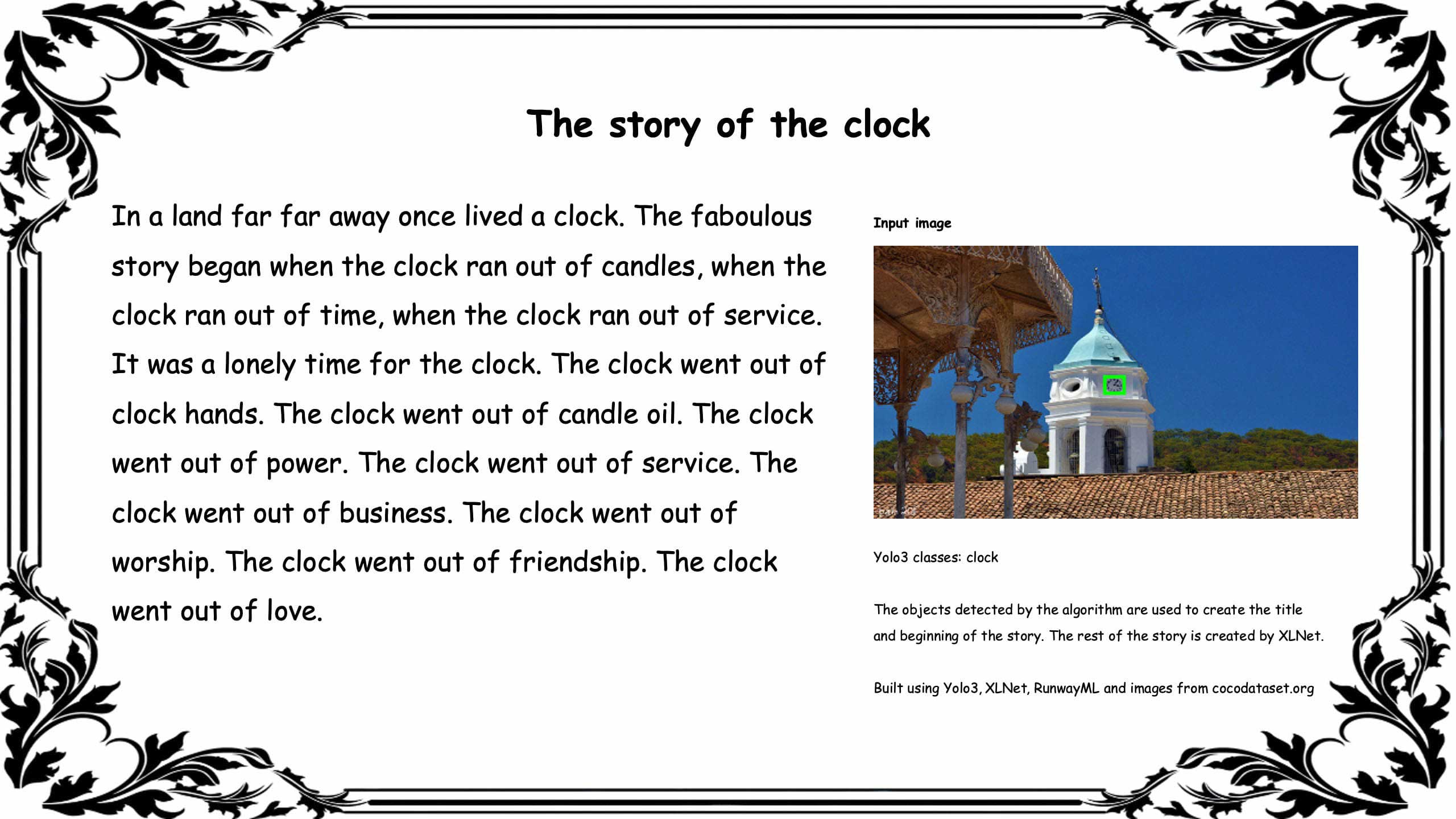

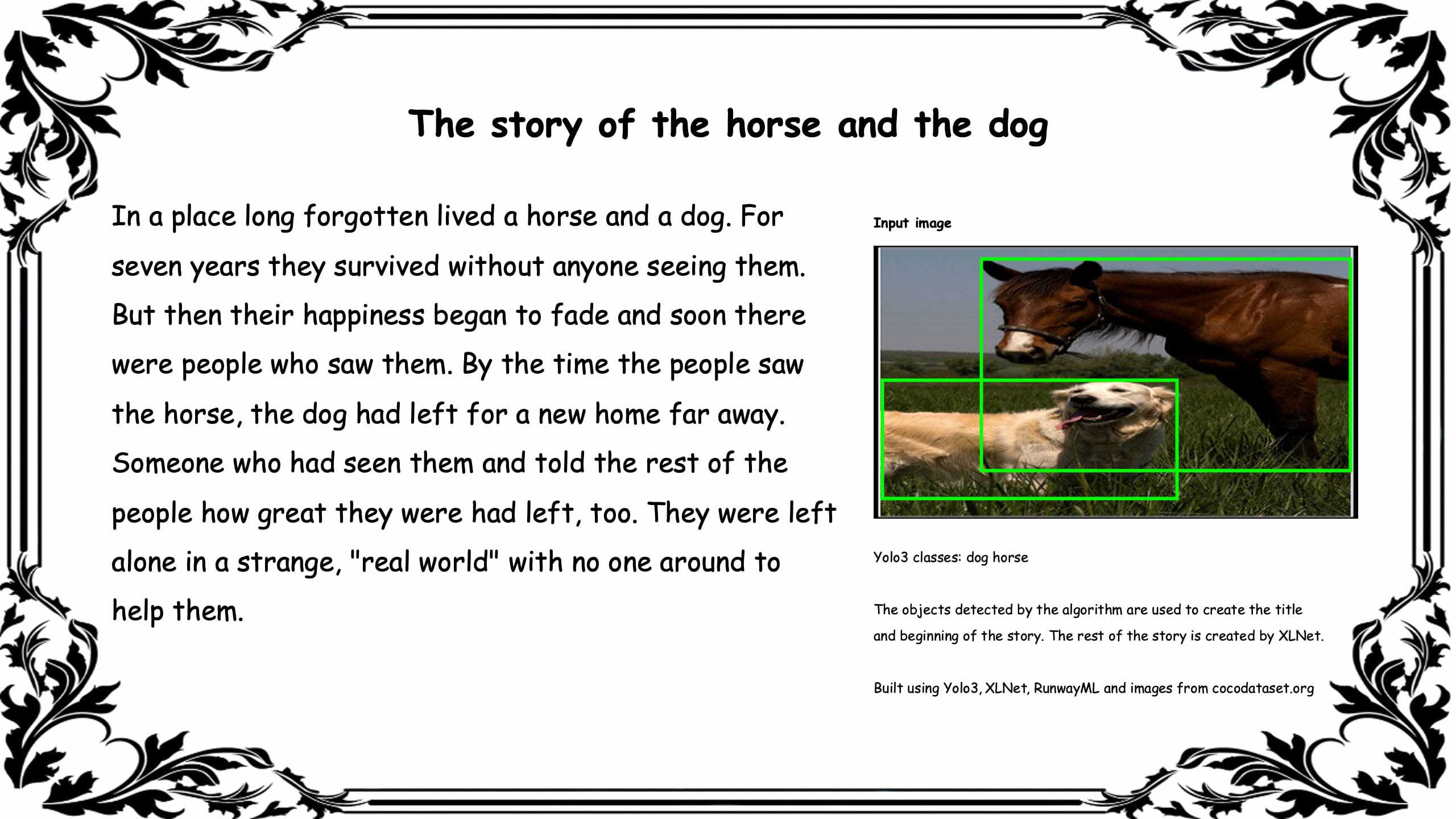

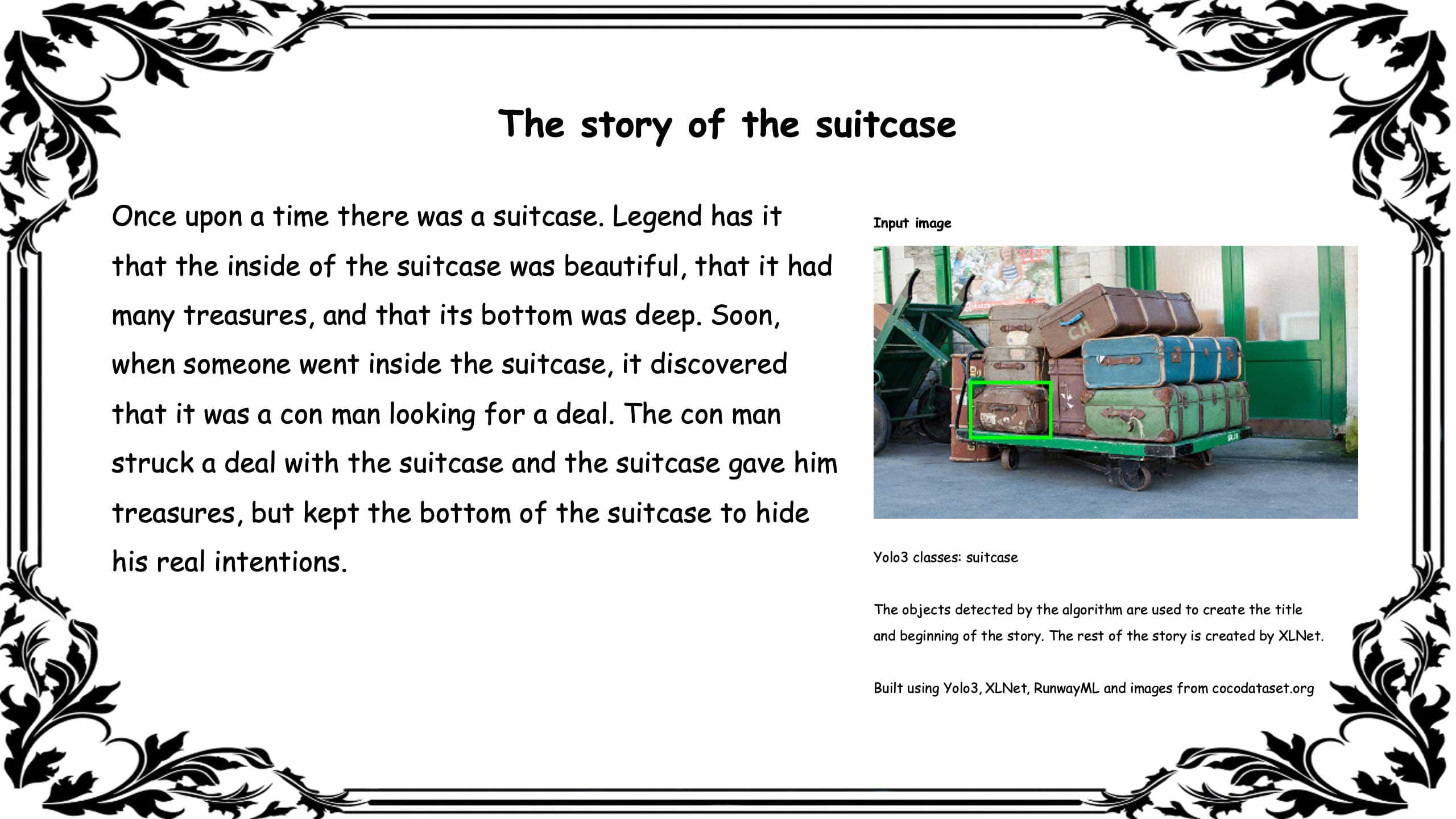

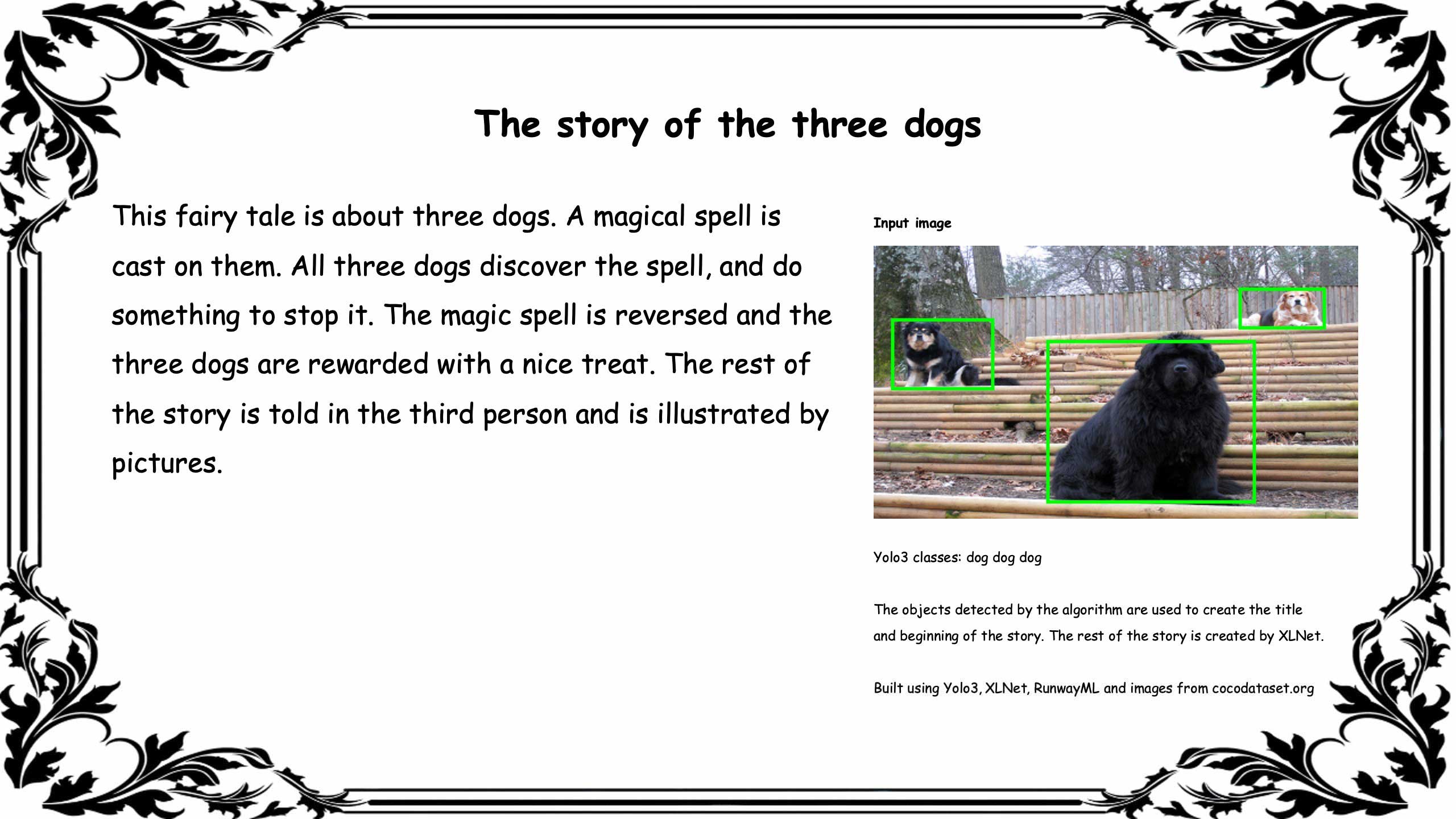

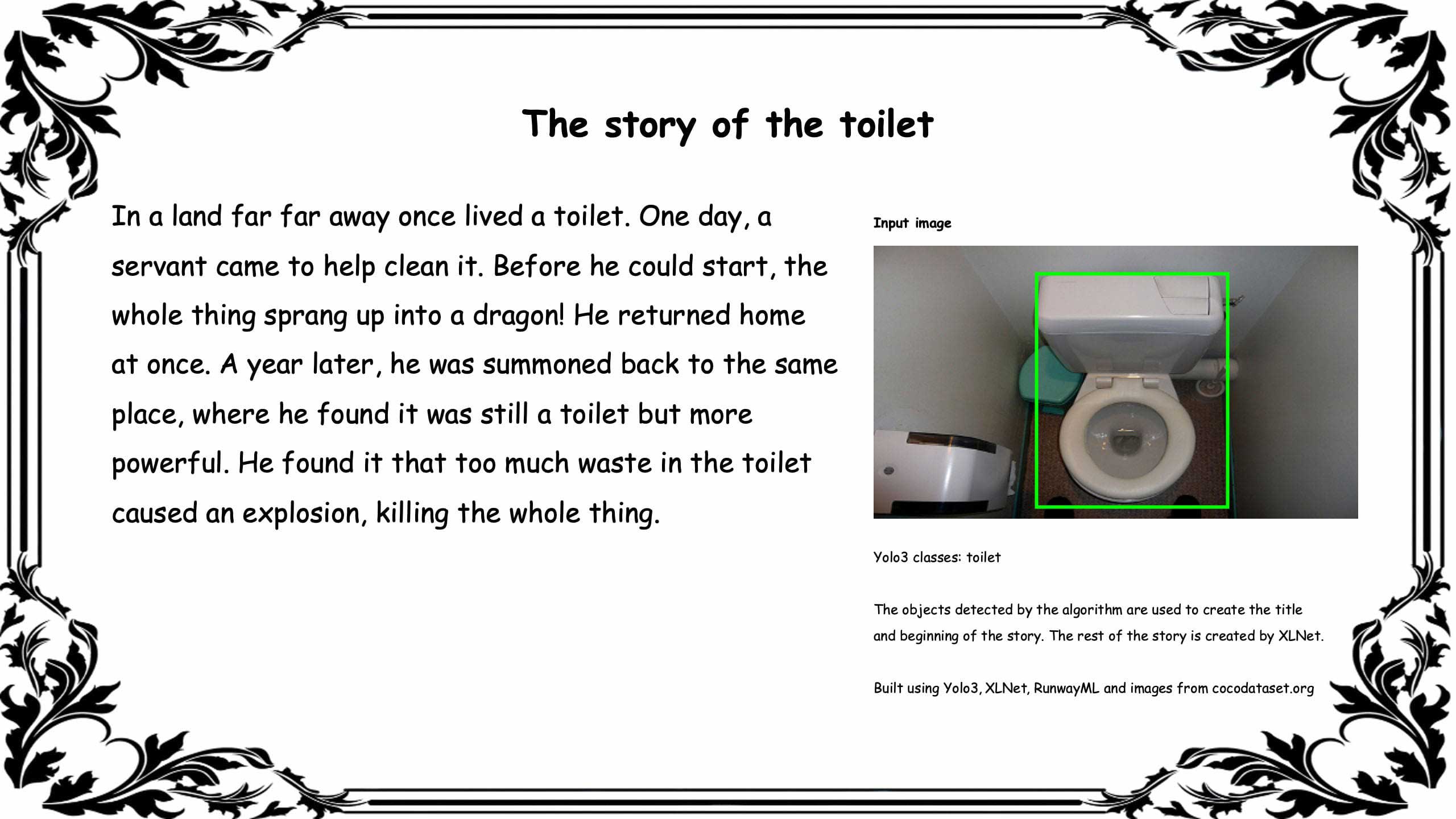

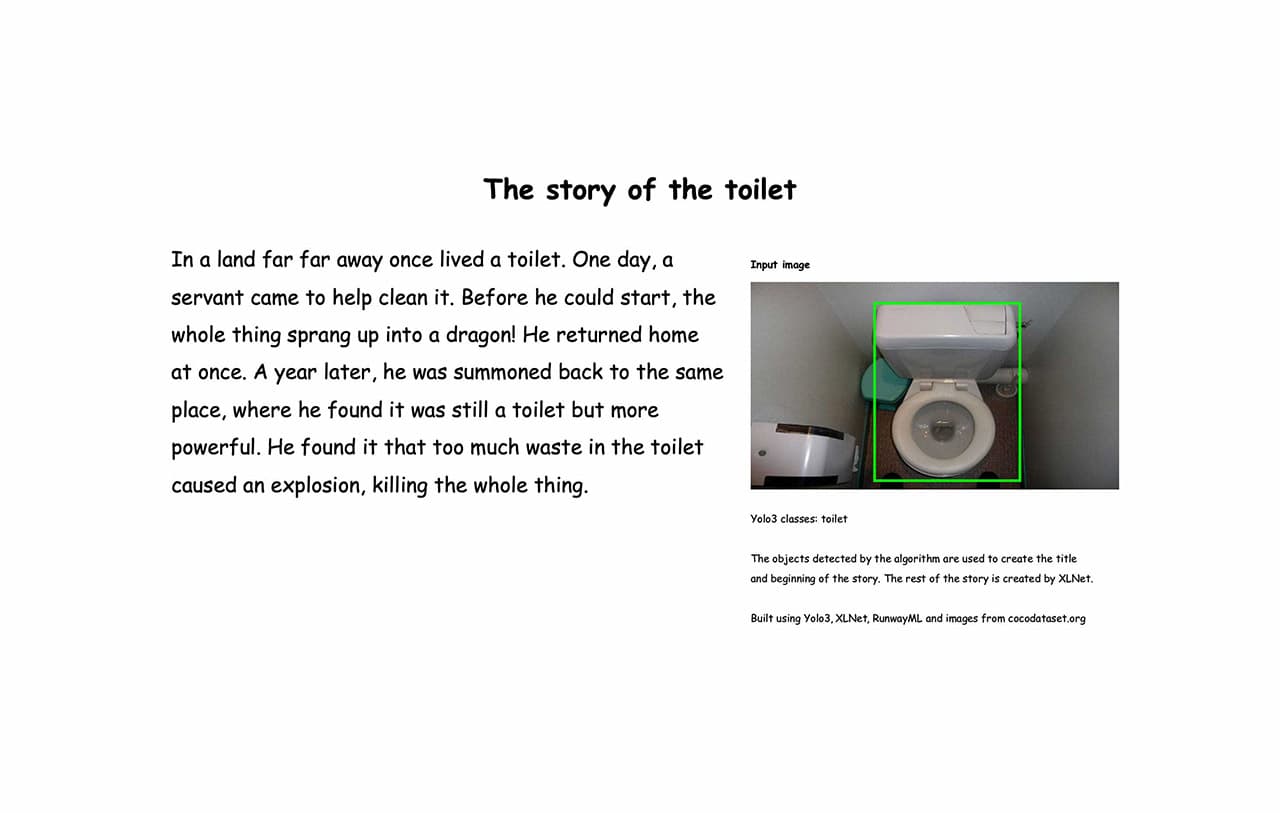

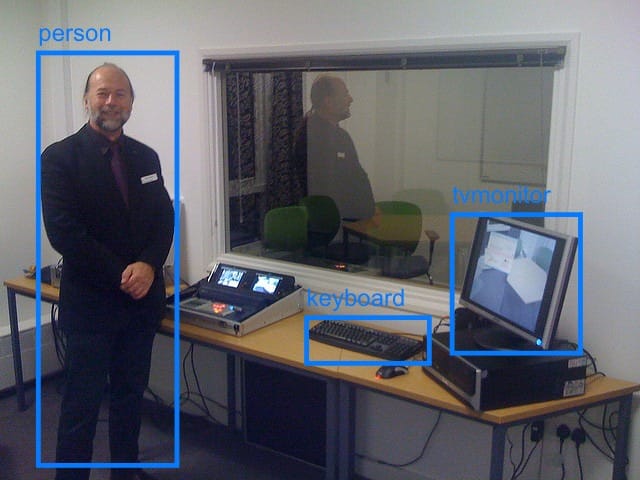

Images of everyday objects are run through the YOLOv3 object detection algorithm, resulting in a list of detected objects (e.g. a person, a keyboard and a tvmonitor).
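A YOLOv3 network proposes many candidate boxes per image, each with a class and a confidence score, so a list like the one above is usually the result of a post-processing step: discard low-confidence boxes, then apply non-maximum suppression (NMS) so that a single object is not reported twice. The following is a minimal pure-Python sketch of that step, assuming the raw network output has already been decoded into hypothetical `Detection` records; the thresholds and sample boxes are illustrative, not the project's settings.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class Detection:
    label: str
    confidence: float
    box: Box


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: List[Detection],
                      conf_threshold: float = 0.5,
                      iou_threshold: float = 0.5) -> List[Detection]:
    """Drop low-confidence boxes, then apply greedy per-class NMS."""
    candidates = sorted((d for d in dets if d.confidence >= conf_threshold),
                        key=lambda d: d.confidence, reverse=True)
    kept: List[Detection] = []
    for d in candidates:
        # Keep a box only if it does not heavily overlap a stronger box of the same class.
        if all(k.label != d.label or iou(k.box, d.box) < iou_threshold for k in kept):
            kept.append(d)
    return kept


if __name__ == "__main__":
    raw = [
        Detection("person", 0.92, (10, 10, 110, 210)),
        Detection("person", 0.85, (15, 12, 112, 205)),    # near-duplicate of the first box
        Detection("keyboard", 0.77, (120, 150, 260, 190)),
        Detection("tvmonitor", 0.64, (130, 20, 300, 140)),
        Detection("cup", 0.21, (280, 160, 310, 200)),     # below the confidence threshold
    ]
    print([d.label for d in filter_detections(raw)])  # ['person', 'keyboard', 'tvmonitor']
```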

Step 2: Generate title + opening sentences

Each fairy tale is unique and unpredictable; however, the title and the beginning of every story follow a structure I have defined. The title follows directly from the detected objects: if two apples and a banana are detected in the image, the title automatically becomes “The story of the two apples and the banana”.

The beginnings of the stories are not generated; instead, they are my attempt to “steer” the algorithm in the desired direction. To produce fairy-tale-like stories, I feed XLNet predefined strings of text that I have written myself, such as “Once upon a time there was” or “In a land far far away lived”, followed by a breakdown of the detected objects. This is an attempt to keep the generated text on track and centered around the objects. For instance, if two apples and a banana were detected in the image, the story could begin: “In a land far far away lived two apples and a banana”. To turn the story into a fairy tale, I then add two or three more words, such as “Legend has it”, “One day” or “A curse”, to set the story off and try to provoke an adventurous continuation.
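The title and opening rules described above can be sketched as a few string-building functions. This is a hypothetical illustration rather than the project's code: the `describe`, `make_title` and `make_opening` helpers, the number-word table and the naive pluralization rule are all assumptions.

```python
import random
from collections import Counter
from typing import List

# Hypothetical lookup tables, not taken from the project.
NUMBER_WORDS = {2: "two", 3: "three", 4: "four", 5: "five",
                6: "six", 7: "seven", 8: "eight", 9: "nine", 10: "ten"}
OPENINGS = ["Once upon a time there was", "In a land far far away lived"]
STARTERS = ["Legend has it", "One day", "A curse"]


def pluralize(word: str) -> str:
    # Naive English pluralization; irregular nouns (e.g. "sheep") are not handled.
    if word.endswith(("s", "x", "ch", "sh")):
        return word + "es"
    return word + "s"


def describe(labels: List[str], definite: bool) -> str:
    """Turn ['apple', 'apple', 'banana'] into 'two apples and a banana'."""
    parts = []
    for label, n in Counter(labels).items():  # keeps first-seen order
        if n == 1:
            article = "the" if definite else ("an" if label[0] in "aeiou" else "a")
            parts.append(f"{article} {label}")
        else:
            count = NUMBER_WORDS.get(n, str(n))
            prefix = "the " if definite else ""
            parts.append(f"{prefix}{count} {pluralize(label)}")
    if len(parts) == 1:
        return parts[0]
    return ", ".join(parts[:-1]) + " and " + parts[-1]


def make_title(labels: List[str]) -> str:
    return "The story of " + describe(labels, definite=True)


def make_opening(labels: List[str], opening: str, starter: str) -> str:
    # Fixed opening phrase + object breakdown + a short phrase to set the story off.
    return f"{opening} {describe(labels, definite=False)}. {starter}"


if __name__ == "__main__":
    labels = ["apple", "apple", "banana"]
    print(make_title(labels))  # The story of the two apples and the banana
    print(make_opening(labels, random.choice(OPENINGS), random.choice(STARTERS)))
```

Choosing the opening and starter phrases at random gives variety across stories while keeping every prompt within the hand-written fairy-tale frame.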

Step 3: Feed beginning of fairy tale to XLNet and let it write the rest of the text

What happens after the opening sentences is the result of a language model called XLNet trying to predict which words and sentences are most likely to follow. In other words, I feed the title and the first few sentences to XLNet, which then continues my fairy tale. XLNet can do this because it has been trained on a huge corpus of text from BookCorpus, Wikipedia and other sources (for more info on XLNet see https://arxiv.org/pdf/1906.08237.pdf). Once XLNet takes over, I more or less lose control and have no influence over the plot structure, words or themes of the story. But because I ask it to continue a text that begins with a fairy-tale structure and is centered around the objects detected in the image, it generally sticks to creating a fairy tale about those objects.
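XLNet itself is far too large to reproduce here, but the basic loop of predicting a likely next word, appending it and repeating can be shown with a toy stand-in. The sketch below swaps in a word-level bigram model with greedy decoding (not XLNet's Transformer-based, permutation-trained architecture); the functions and the tiny training text are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigrams(corpus: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def continue_text(prompt: str, follows: Dict[str, Counter], max_words: int = 10) -> str:
    """Greedily append the most frequent next word until max_words or a dead end."""
    words: List[str] = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in the training text
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    corpus = ("Once upon a time there was a king . The king had a daughter . "
              "One day the king went to the forest .")
    model = train_bigrams(corpus)
    print(continue_text("One day", model, max_words=2))  # one day the king
```

Greedy decoding like this quickly falls into repetitive loops, which is one reason large language models are usually sampled from rather than always taking the single most likely word.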

Step 4: Display image, detections + text on screen and save the result

The end result is saved as an image showing the original image, the detected objects and the generated story.
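This final step could be sketched with the Pillow imaging library: paste the photo onto a taller white canvas and draw the detected labels and the wrapped story text underneath. The `compose_result` function, its layout parameters and the rough 7-pixels-per-character width estimate are assumptions for illustration, not the project's actual rendering code.

```python
import textwrap
from typing import List

from PIL import Image, ImageDraw, ImageFont


def compose_result(photo: Image.Image, labels: List[str], story: str,
                   text_width: int = 60, line_height: int = 14,
                   margin: int = 10) -> Image.Image:
    """Place the photo on top, with the detected labels and story text below it."""
    lines = ["Detected: " + ", ".join(labels), ""]
    for paragraph in story.split("\n"):
        # Wrap each paragraph; keep empty paragraphs as blank lines.
        lines.extend(textwrap.wrap(paragraph, width=text_width) or [""])
    text_height = margin * 2 + line_height * len(lines)
    # Rough guess of ~7 px per character for the default font.
    canvas_width = max(photo.width, text_width * 7 + margin * 2)
    canvas = Image.new("RGB", (canvas_width, photo.height + text_height), "white")
    canvas.paste(photo, (0, 0))
    draw = ImageDraw.Draw(canvas)
    font = ImageFont.load_default()
    y = photo.height + margin
    for line in lines:
        draw.text((margin, y), line, fill="black", font=font)
        y += line_height
    return canvas
```

For example, `compose_result(Image.open("photo.jpg"), labels, story).save("fairy_tale.png")` would write the combined result to disk (the file names here are placeholders).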